An "otterly" out of left field coding agent moment

My "otter on a plane" moment

“Curiosity is, in great and generous minds, the first passion and the last.” - Samuel Johnson

“If there’s a new way, I’ll be the first in line, but it better work this time.” - Peace Sells - Megadeth

Learning by just doing

I’ve done a lot of serious things in my career - both academically and professionally. Some of those serious things have arisen from just looking at an important problem and thinking “what happens if I try ____?” Other times something that started as play cascades through a chain of coincidences to become real value.

This leads me to the belief that my learning about something new is accelerated when I pick some random thing to solve (using the new tech or method). Over time I can become more intentional about what problem is “worth it” to focus on solving. Just mucking around with a new technology on some problems you’re personally interested in is a great way to start. At least for me. It builds real world intuition that lets me ladder up to bigger opportunities. I think that’s why the “random walk” framing I named this substack after appeals to me.

I’ve approached agentic (“vibe”) coding this way with a portfolio of different ideas that are hobby curiosity based rather than “big problems.” From a few small (but not quite toy) problems I’m building a mental path of what to explore next. More on that another day … let’s talk about otters.

Umm, what’s up with the otters? Is everything OK?

Ethan Mollick is fond of pointing out how he evaluates each new generation of visual GenAI with the prompt “otter on a plane using wifi.” The results have gotten downright impressive.

This week I decided to give Google’s Gemini 3 AI Studio coding a whirl - and had some breakthrough success on my own non-otter related benchmark prompt. I’ll admit I was sucked in mainly via curiosity and Google’s mention that it was “free.” But now I’m worrying that they’re going to realize that selling my personal data to everyone on planet earth isn’t going to cover my vibe coding bill. Possibly resulting in me having to start paying for another service.

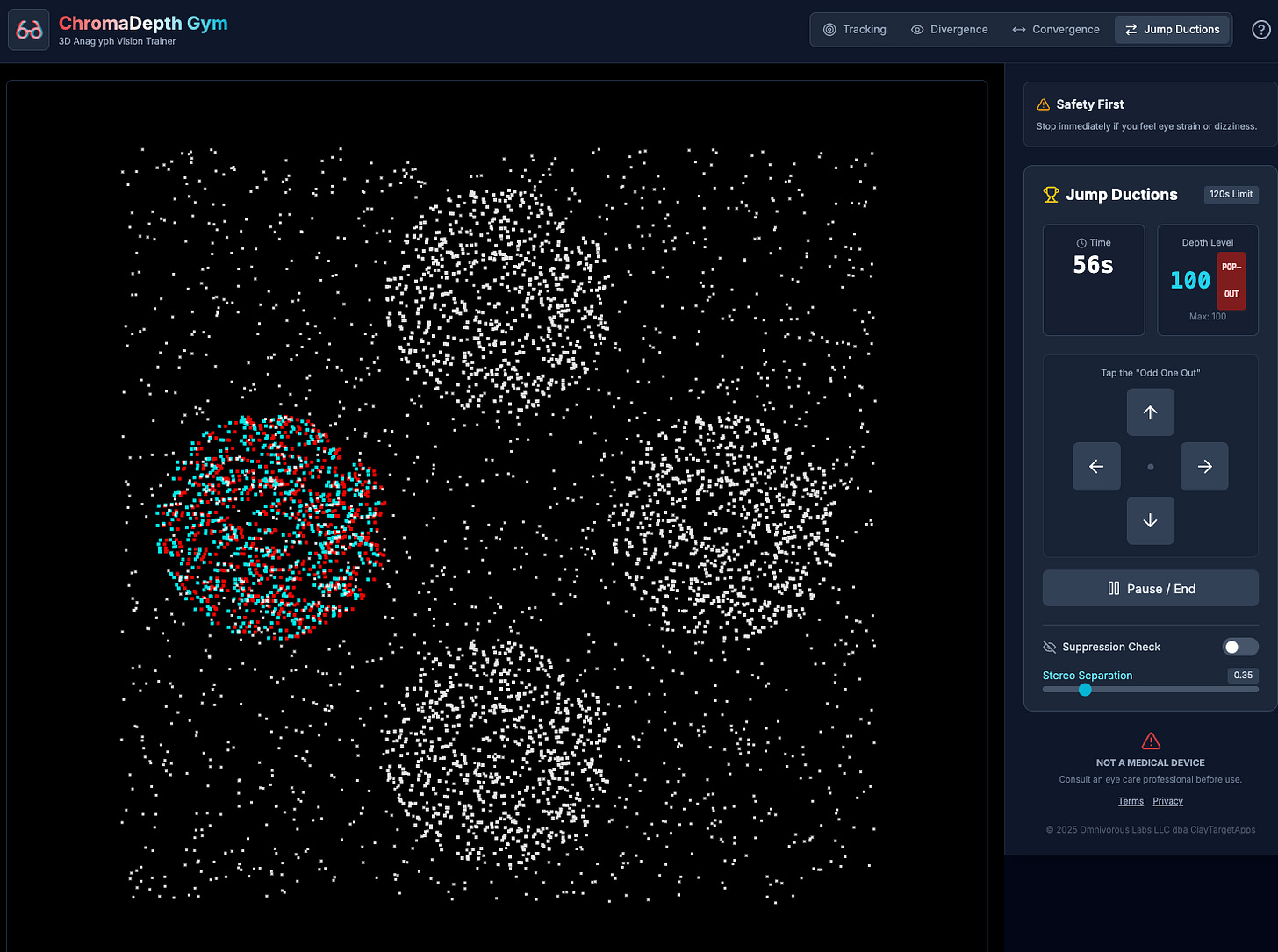

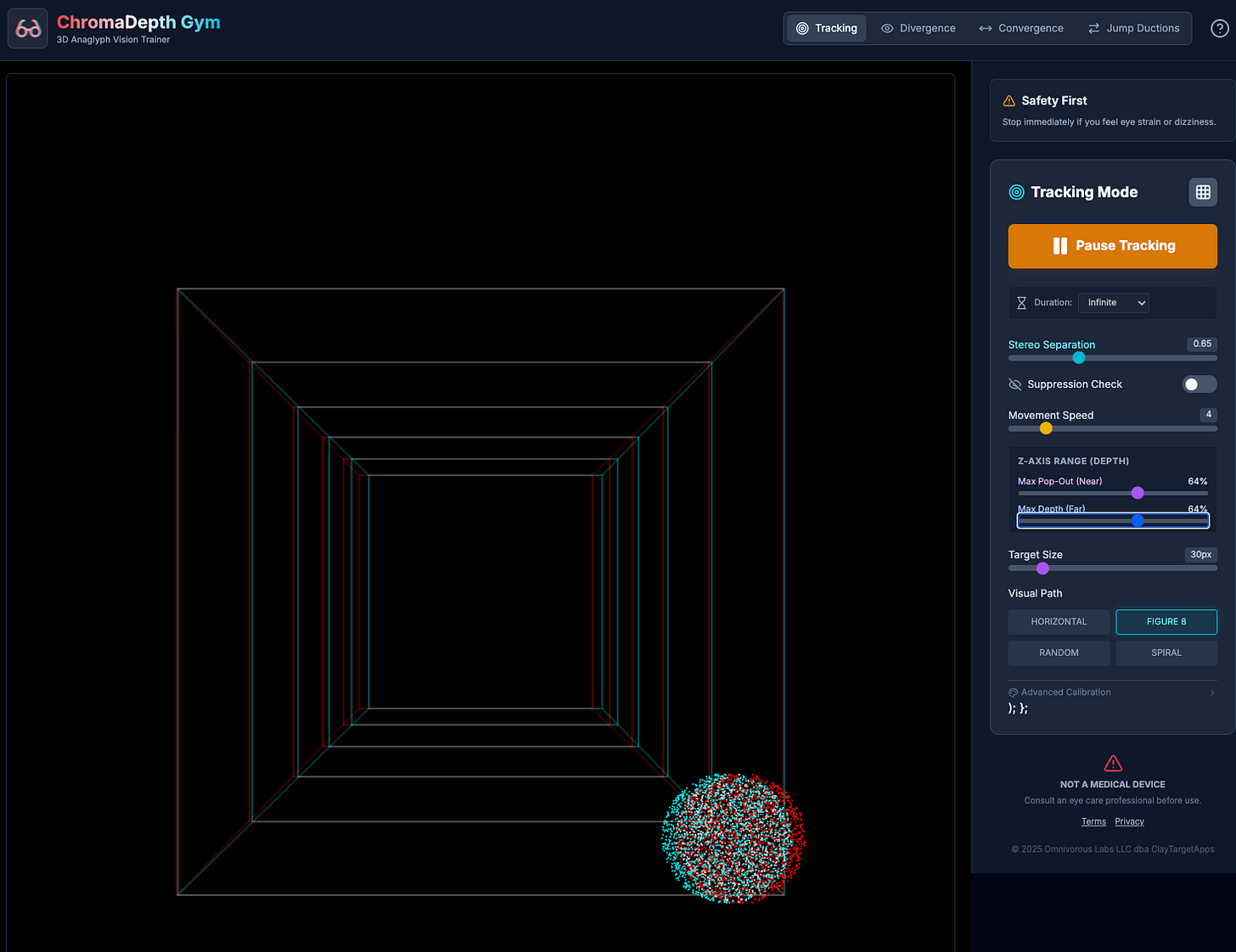

My benchmark test sadly isn’t as catchy as a cute member of the weasel[1] family. It’s still quirky, but lacks the mass appeal of everyone’s favorite slippery (if quite smelly) zoo and sometimes aquarium resident. Instead I seem to keep trying to vibe code a 3D app using those red/blue glasses that went out of favor with cheesy monster movies. Actually a 3D glasses based eye exercise app if I’m being specific - pretty niche, and perhaps sketchy sounding at that.

3D glasses - wait, what? I think the otters made more sense.

Yes - it’s pretty niche. But there’s serious science behind the exercise part, i.e., not sketchy[2].

I’d started late to the vibe coding party - as chronicled in my earlier post. For reasons I don’t really recall, when I first got to working on Bolt I typed something along the lines of the following into the prompt box:

Using standard red/blue glasses I’ve like to practice tracking 3D rendered discs across a variety of visual paths and speeds. These should be configurable by the user. Ranging in simulated distances to within a few feet out to 30 yards. In addition in a separate path I’d like to use the analglyph approach to work on improving my ability to diverge my eyes at distance (with a variety of practical, fun and safe settings).

It seems pretty random. But there was some method to the madness.

1. I’d been doing some vision therapy that had improved my dynamic vision. I won’t elaborate much on this vision rabbit hole beyond this paragraph. I’d identified my ability to visually diverge and converge as something to improve as part of some sporting hobbies[3] and I’d been working on it. In the process I’d used some online (and virtual reality based) tools that had been recommended. None of them seemed that technically complicated - but they were pretty darn expensive for subscriptions. I wanted to have access to some of the tools, but didn’t want to pay. I’d thought about writing my own - therefore it was on my mind when I tried the first AI coding tools (and needed something to ask them to build).

2. Given (1), I also figured there was “like totally no way” an AI could code something that niche. May as well swing for the fences when testing the tech that might render my sometimes profession obsolete.

As it turns out, the first time I tried this was with Bolt, and I almost fell out of my chair when what it produced mostly worked. In the sense that the output created moving visual targets you could follow with your eyes, you could deploy the app, etc. However, it really didn’t solve for the important part of rendering working 3D anaglyphs. It failed miserably at that part, and I simply could not talk it into something functional. I’ll admit I breathed a sigh of relief that humans might still count for something a little while longer.

Since then, I’ve had good success using modern agentic coding tools for everything from deep dive legacy system exploration to several app based personal projects. But I’ve continued to test this pet project idea, without success, on each vibe coding platform I’ve taken for a spin. That has included, among others, Cursor and Claude Code.

Until now.

Why hello there Google Gemini 3 Pro free trial…

As the book Vibe Coding reminds readers - the AI you’re using to code right now is probably the worst version of the technology you’re likely to encounter going forward. In that spirit, when I got an email from Google saying there was something new to try ... I totally ignored it for most of the day. Frankly, who doesn’t get an email offering some new AI tool to check every 13 minutes? And that’s not even including the hellscape of AI hype that is LinkedIn these days.

But at some point the cheapskate grad student that’s in me thought “free”? Well - let’s at least click on the link and see what Google is spending all the money from exploiting my personal data on?[4]

If you’ve been even half paying attention you can probably guess what I decided to try first here. Yep - the prompt above about the anaglyph and vision stuff. Now, either I’m getting a lot better at prompting - or Gemini 3 is a solid leap forward on the arcane benchmark that no one but me uses. I’m guessing the latter.

The biggest difference between the Gemini 3 experiment and the tools I’d used so far was its ability to (as the kids say) “understand the assignment.” It was less about making things move around and more about ensuring the 3D visual effects actually worked. No other attempt I’ve made has come close - and in a few hours I feel I have something that works well enough for personal use. I’m going to keep tweaking it and maybe it will eventually provide value to others as well.
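For anyone wondering what “actually worked” involves, here is a minimal sketch of the geometry an anaglyph renderer has to get right. To be clear, this is not the code Gemini produced. It is an illustration in Python under stated assumptions: a viewer centered in front of the screen, a default screen distance of 0.6 m, an interpupillary distance of 63 mm, a display of roughly 96 DPI, and the red lens over the left eye. The function names and parameters are hypothetical.

```python
YARD_M = 0.9144  # metres per yard


def screen_parallax_m(target_distance_m, screen_distance_m=0.6, ipd_m=0.063):
    """Signed on-screen gap between the right-eye and left-eye images, in metres.

    Zero at the screen plane, positive (uncrossed, eyes diverge) for targets
    behind the screen, negative (crossed) for targets in front of it.
    The default distances are assumptions, not measured values.
    """
    if target_distance_m <= 0 or screen_distance_m <= 0:
        raise ValueError("distances must be positive")
    return ipd_m * (target_distance_m - screen_distance_m) / target_distance_m


def eye_offsets_px(target_distance_m, pixels_per_m,
                   screen_distance_m=0.6, ipd_m=0.063):
    """Horizontal pixel offsets (left_eye, right_eye) from the disc's nominal position."""
    parallax_px = pixels_per_m * screen_parallax_m(
        target_distance_m, screen_distance_m, ipd_m
    )
    half = 0.5 * parallax_px
    return (-half, half)


if __name__ == "__main__":
    # 3780 px/m is roughly a 96 DPI display (an assumption for the demo).
    for yards in (1, 10, 30):
        left, right = eye_offsets_px(yards * YARD_M, pixels_per_m=3780)
        print(f"{yards:>2} yd: red (left eye) {left:+.1f} px, "
              f"blue (right eye) {right:+.1f} px")
```

In a real app the left-eye disc would be drawn only in the red channel and the right-eye disc only in the blue channel, each shifted by its offset. The gap approaches the interpupillary distance as the simulated distance grows, so most of the change in divergence happens in the first few yards.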

It’s not perfect - after several hours of playing with it there are some odd regressions, or at least diminishing returns. For example a propensity to dump evolving code directly into the context window (instead of responses) and a continual forgetting to essentially “ship” the changes. But I’m going to soldier on because it seems clear at least the basics work and I’ve never gotten that far before[5].

The overall UI implementation seems very slick, GitHub integration worked painlessly and there seem to be a lot of bonus “vibe coding” bits I haven’t yet tried. Based on the positive experience I pretty quickly installed the Google Antigravity IDE to check out later.

What was my point again?

I’m not sure I really have one. Other than to talk about otters, and note again there’s a ton of truth in Yegge and Kim’s observation that the agentic coding system you’re using today is the worst you’re ever going to use going forward. Turns out that sometimes a free offer is worth trying to get you out of a local minimum.

I hope that venture capital and the AI arms race will keep funding these likely underpriced tools. I’m enjoying this one and hoping no one shows up to ask for another $100/month like I’m kicking over to Anthropic. It’s fun and all - but there’s no big trust fund paying for my learning at the moment.

In case you’re curious about the benchmark’s output, I included a few samples below. They don’t look like much without cheap 3D glasses - but please take my word they work.

Will this be “useful” or get “scale”? - Dunno, but (a) that’s not really my point, it’s just an anchor to explore, and (b) I bet no one asks Ethan Mollick that. ;-)

Update: I played a bit more with Antigravity

TLDR; this Antigravity + Gemini 3 thing has game, and a bit of a sharper attitude than Claude. Just don’t try to pay Google for it.

The AI Studio worked miraculously well. Until it very much didn’t. It didn’t seem to grasp that it was operating in a cloud environment with limits. I had some similar issues when Claude Code threw me $1000 in credits to try their cloud version, which similarly didn’t quite understand where it stood relative to The Matrix. But the Google one wasn’t just mildly dumb - it seemed to really lack a lot of ability to problem solve. Which then got me to try their Antigravity IDE product.

Antigravity seemed to work a lot better in terms of not getting stuck in the same gravity well (pun intended) when I asked it to solve a problem that it absolutely said it knew how to do (the LLM had suggested the specific solution fwiw). As opposed to AI Studio’s interface, which seemed to suddenly forget how to save a file in the cloud environment, Antigravity made pretty short work of it.

Antigravity seems to grab tools more aggressively, or have the ecosystem to better point the user to them than Claude Code. For example rather than wonder if a website was rendering OK it just asked me to install a plugin, possibly sign my soul away, and then it got busy checking stuff directly. I know Claude Code can do it - but Antigravity (in a mostly not creepy way) just got me to do it.

I’m still getting used to how Antigravity threads in requests for me to look at stuff, and I haven’t even checked out the full agent management mode - but so far I’m encouraged to keep learning the platform. Which I’m honestly surprised at because I was pretty happy with Claude Code. I guess that’s the power of nailing my otter benchmark.

So far it feels a little less like “pair programming” than Claude Code did. Sort of like I’m getting bossed around by a senior engineer with a bit of attitude. But hey, if it works…

The billing structure for Antigravity has been pretty inscrutable to me. Thanks to Reddit I eventually tracked it down here: https://antigravity.google/docs/plans. TLDR; they’re not charging for it - but it resets every 5 hours and you cannot buy more. I guess when they say free beta they mean “ONLY FREE BETA” - even if you want to pay more. I’m sure they’ll “fix that” soon.

Postscript: Is this like Mallrats with the sailboat?

Short answer: No, not really.

Longer answer: Eyes are incredible things. Yes, we all know that, because our brains can convert light into a belief that we truly understand the universe in a meaningful way. But they’re also incredible in their ability to defocus a bit and enjoy the sort of MagicEye posters that form a side quest in the criminally underappreciated film Mallrats. Once I had the movie on my mind, thanks to the amazing power of Reddit I was able to find a post that included the famous poster of a ‘Schooner’.

From this I can finally see the 3D picture and also confirm it was not really a sailboat after all. If nothing else all that vision therapy got me to be able to see those hidden images. They’re actually pretty fun, and relaxing in a weird way.

[1] Or the Mustelidae family if you have something against weasels.

[2] This isn’t about making your reading or distance vision better. I’m pretty sure people that claim that are being sketchy as heck. This is about working on your ability to track objects close and far dynamically. Stuff like this.

[3] Yes - I know when folks look at me and I write “sports hobbies” they immediately want to say “wait, I don’t think dungeons and dragons is a sport. Is it?” But we’re all more complex than first impressions. ;-) For the record I haven’t played D&D since elementary school. Not that there’s anything wrong with role playing games of that sort. I’m pretty sure the whole gateway to demonic possession thing is overblown.

[4] I’m of course joking. Given that I know Google has a mission statement about “being no more than 10% evil” I’m being terribly unfair. I’m sure most of their cash machine is from totally useful and good for the world things. I’m probably misremembering that mission statement anyway - it’s probably more like “be only 10% as evil as Meta” or something like that.

[5] I eventually realized that as in many LLM based tools I just needed to clear the context and start over and it worked again.